Is there any way to back up the Ghost database and config automatically to Amazon S3 or anywhere else?

Is there no way to back up sites to Amazon S3? I did some Google searching and found a few articles on how to make backups.

AWS CLI?

I use a combination of automysqlbackup and the AWS CLI on some of my servers to back up to Amazon S3 and/or Backblaze.
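A minimal sketch of how the two can be paired, assuming automysqlbackup writes its dumps to `/var/lib/automysqlbackup` (the Debian package default; check `BACKUPDIR` in your automysqlbackup config) and that the AWS CLI is already configured with credentials. `sync_dumps` and the `mysql/` key prefix are hypothetical. For Backblaze B2, the same command can target B2's S3-compatible API by passing the bucket's endpoint through the AWS CLI's `--endpoint-url` option.

```shell
#!/bin/bash
# Sketch: push automysqlbackup's dump directory to S3 (or an
# S3-compatible service such as Backblaze B2) with the AWS CLI.
# ASSUMPTIONS: dumps live in /var/lib/automysqlbackup (Debian default,
# see BACKUPDIR in your config) and `aws` already has credentials.

# sync_dumps SRC_DIR BUCKET [ENDPOINT_URL]  (hypothetical helper)
sync_dumps() {
  local src="$1" bucket="$2" endpoint="${3:-}"
  # `aws s3 sync` only uploads files that are new or changed
  local args=(s3 sync --no-progress "$src" "s3://$bucket/mysql/")
  # Only pass --endpoint-url when targeting a non-AWS, S3-compatible service
  if [ -n "$endpoint" ]; then
    args+=(--endpoint-url "$endpoint")
  fi
  aws "${args[@]}"
}

# Example (bucket name and endpoint are placeholders):
#   sync_dumps /var/lib/automysqlbackup yourbucket
#   sync_dumps /var/lib/automysqlbackup yourbucket https://s3.REGION.backblazeb2.com
```

Because `sync` skips files that are already in the bucket, running it after each automysqlbackup run only transfers the new dumps.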

I’ve set up a bash script that runs once a day which will:

- Shut down Ghost via CLI

- Copy the /content directory to /backup

- Remove unnecessary files (logs for example)

- Compress into a single file (tar.gz in my case)

- Upload tar file to S3 bucket via AWS CLI

- Dump entire DB to a file

- Upload the DB dump to S3 bucket via AWS CLI

- Start Ghost server via CLI

- Clean up files (copy of content dir, tar file, and db dump)

I can share my script with you if you’d like, but you will need to adjust it for your setup. It doesn’t back up the other config files yet; backing up my nginx and Ghost configs is on my TODO list.
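For the config part, one possible sketch is to bundle the relevant files into a single archive and upload it alongside the content backup. Assumptions: a Ghost-CLI install with its config at `/var/www/ghost/config.production.json` and nginx site files under `/etc/nginx/sites-available`; `archive_configs` is a hypothetical helper. Ghost-CLI installs often symlink their nginx files into `sites-available`, so the sketch dereferences symlinks (`tar -h`) to store the real file contents.

```shell
#!/bin/bash
# Sketch: bundle config files (Ghost + nginx) into one archive.
# ASSUMPTIONS: Ghost-CLI layout with the config at
# /var/www/ghost/config.production.json and nginx site files under
# /etc/nginx/sites-available; adjust the paths to your server.

# archive_configs OUT_FILE PATH...  (hypothetical helper)
archive_configs() {
  local out="$1"
  shift
  # Strip the leading "/" and archive relative to / so the original
  # layout is kept; restore later with: tar -xzf OUT_FILE -C /
  local rel=()
  local p
  for p in "$@"; do
    rel+=("${p#/}")
  done
  # -h follows symlinks so linked nginx configs are stored as real files
  tar -czf "$out" -h -C / "${rel[@]}"
}

# Example:
#   archive_configs /tmp/ghost-config.tar.gz \
#     /var/www/ghost/config.production.json /etc/nginx/sites-available
#   aws s3 cp --no-progress /tmp/ghost-config.tar.gz s3://yourbucket/ghost-config.tar.gz
```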

Thanks, I will look for it.

I didn’t know about AWS-CLI.

Thanks, that would be great. I just need the part about backing up the entire DB, as I don’t store my images on this server.
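If only the database is needed, a minimal sketch is to stream `mysqldump` through `gzip` straight to S3 (`aws s3 cp -` reads the upload from standard input), using a date-stamped key so each day's dump is kept rather than overwritten. Assumptions: the MySQL credentials live in an option file instead of on the command line, the tables use InnoDB (the MySQL 8 default) so `--single-transaction` gives a consistent dump without stopping Ghost, and the function name, option-file path and key layout are hypothetical.

```shell
#!/bin/bash
# Sketch: dump one MySQL database, gzip it and stream it to S3.
# ASSUMPTIONS: credentials are in an option file with a [client]
# section, tables are InnoDB (so --single-transaction is consistent
# without stopping Ghost), and the AWS CLI is configured.
set -o pipefail  # make the pipeline fail if mysqldump fails

# backup_db OPTION_FILE DB_NAME BUCKET  (hypothetical helper)
backup_db() {
  local cnf="$1" db="$2" bucket="$3"
  # One key per day, e.g. ghost-db/your_db_name-2024-01-31.sql.gz
  local key="ghost-db/${db}-$(date +%F).sql.gz"
  # --defaults-extra-file must be the first option given to mysqldump;
  # "aws s3 cp -" uploads whatever arrives on standard input.
  mysqldump --defaults-extra-file="$cnf" --single-transaction "$db" \
    | gzip \
    | aws s3 cp - "s3://$bucket/$key"
}

# Example:
#   backup_db ~/.ghost-backup.cnf your_db_name yourbucket
```

Streaming avoids writing the dump to local disk; the trade-off is that a failed upload leaves no local copy to retry from.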

I tried some of the solutions and they didn’t work…

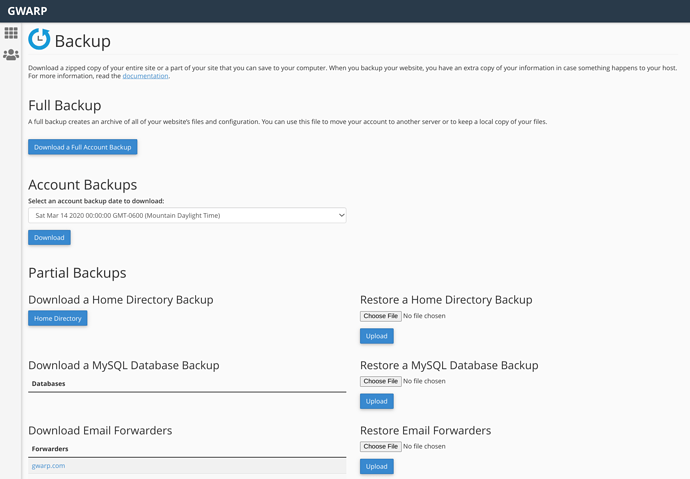

@moeen If all else fails, you can always set up automatic backups in WHM or cPanel, if you install that on your server.

I don’t plan to install WHM or cPanel on my server.

Thanks.

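```bash
#!/bin/bash
# Daily Ghost backup: copy /content and dump the MySQL database to S3.
# Change the install path, DB name/user/password and bucket to match yours.

echo "Stopping Ghost server..."
cd /var/www/ghost || exit 1
ghost stop
echo "Ghost stopped"

echo "Copying /content directory..."
# Clear any copy left over from a previous failed run; otherwise cp
# would nest the new copy inside it (backup/content)
rm -rf /var/www/ghost/backup
cp -ar /var/www/ghost/content /var/www/ghost/backup
echo "Copy complete"

echo "Removing /logs..."
rm -r /var/www/ghost/backup/logs
echo "Logs removed"

echo "Zipping content..."
# -C stores paths relative to /var/www/ghost (backup/...)
tar -czf /var/www/ghost/ghost-backup.tar.gz -C /var/www/ghost backup
echo "Zip complete"

echo "Uploading zip file to s3..."
aws s3 cp --no-progress /var/www/ghost/ghost-backup.tar.gz s3://yourbucket/ghost-backup.tar.gz
echo "Upload complete"

echo "Backing up database..."
# "backups" is a MySQL user with read-only privileges. A password given
# with -p on the command line may be visible to other users (e.g. via ps).
mysqldump -u backups -pyourpassword your_db_name > /var/www/ghost/your_backup.sql
echo "Database backup complete"

echo "Uploading sql dump to s3..."
aws s3 cp --no-progress /var/www/ghost/your_backup.sql s3://yourbucket/your_backup.sql
echo "Upload complete"

echo "Starting Ghost server..."
ghost start
echo "Ghost started"
ghost status

echo "Removing backup files..."
rm /var/www/ghost/ghost-backup.tar.gz
rm /var/www/ghost/your_backup.sql
rm -r /var/www/ghost/backup
echo "Files removed, backup complete"
```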

@moeen Here you are, sorry it took a while. I’ve been a bit busy. You’ll need to adjust it for where you installed Ghost, your DB name, your S3 bucket, etc.

Also, I created a MySQL user account (backups) with read-only privileges, so it’s relatively safe for me to pass its password in plaintext, but it’s not a good idea in general. I would also recommend logging any errors so that you can debug the script if it isn’t working.
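On the plaintext password, one common alternative, sketched here under assumptions, is to keep the credentials in a MySQL option file readable only by the backup user and point `mysqldump` at it with `--defaults-extra-file`, so the password never appears in the script or on the command line. The helper name and file paths are hypothetical; passwords containing `"` or `\` would need escaping in the option file.

```shell
#!/bin/bash
# Sketch: store MySQL credentials in an owner-only option file.
# ASSUMPTION: hypothetical helper; mysqldump reads the [client] group,
# so it can then be run with --defaults-extra-file=FILE.

# write_mysql_credentials FILE USER PASSWORD
write_mysql_credentials() {
  local file="$1" user="$2" password="$3"
  # umask 077 in a subshell so a new file is never group/world-readable
  (
    umask 077
    printf '[client]\nuser=%s\npassword="%s"\n' "$user" "$password" > "$file"
  )
  # Also tighten permissions if the file already existed
  chmod 600 "$file"
}

# Example (run once, then drop -u/-p from the backup script):
#   write_mysql_credentials "$HOME/.ghost-backup.cnf" backups 'yourpassword'
#   mysqldump --defaults-extra-file="$HOME/.ghost-backup.cnf" your_db_name > your_backup.sql
#
# To log errors, put this near the top of the backup script so stdout
# and stderr are appended to a log file:
#   exec >>/var/log/ghost-backup.log 2>&1
```

To run the backup once a day, a crontab entry such as `0 3 * * * /path/to/backup.sh` (path hypothetical) is enough.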

I wrote a bash script that:

- uses `curl` to get the database as JSON from the `/ghost/api/v3/admin/db/` endpoint
- backs up images with `rsync` to the script directory for upload
- uploads the backup to Google Drive (with Node.js) if there are changes

It compares the newly downloaded database with the previous one. Some values in the export change every time you hit the API endpoint, so I make temporary copies with those values stripped.

That lets me compare the databases with diff and only back up on changes (the original, un-stripped database is uploaded).

The end result is that my nightly cron job only uploads a backup if something has changed - images or the database.
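The change check can be sketched in shell. Assumptions: the volatile value is the `exported_on` timestamp in the export's `meta` block (which values change is not spelled out above, so `strip_volatile` would need extending for any others), the JSON is compact (no space after the colon), and both function names are hypothetical. Only normalised copies are compared; the original file is what gets uploaded.

```shell
#!/bin/bash
# Sketch: decide whether a freshly downloaded Ghost DB export differs
# from the previous one, ignoring values that change on every request.
# ASSUMPTION: the volatile value is "exported_on" in compact JSON;
# extend the sed expression for any other fields that change per request.

# Print the export with volatile values replaced by a fixed placeholder
strip_volatile() {
  sed -E 's/"exported_on":[0-9]+/"exported_on":0/g' "$1"
}

# export_changed NEW PREVIOUS: succeeds when there is no previous export
# or when the normalised exports differ
export_changed() {
  local new="$1" prev="$2"
  [ -f "$prev" ] || return 0
  ! diff -q <(strip_volatile "$new") <(strip_volatile "$prev") > /dev/null
}

# Example: upload the original (un-stripped) file only on changes
#   if export_changed db.json db.previous.json; then
#     upload db.json   # hypothetical upload step, e.g. to Google Drive
#     cp db.json db.previous.json
#   fi
```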

@ijongkim @imyavetra Both of your solutions seem interesting. Do you guys have a blog post or step-by-step guide for them?

Hey! I documented my script, and the README on GitHub has setup instructions, but I’d be happy to write a quick blog post on setting it up.

Or, message me with any specific questions.